Political polling often stops at the numbers.

The industry’s obsession with percentages turns public opinion into a spreadsheet, where nuance (the why behind the what) gets lost in translation. Traditional trackers are great at mapping trends. But they fall short at capturing the real stories, motivations, and shifting priorities that shape voter sentiment.

Glaut set out to fill this gap. Using AI-moderated interviews (AIMIs), we built the first qualitative tracker for U.S. politics.

Disclaimer: This demo project utilized samples balanced for age, gender, and ethnicity; however, results are not fully representative of the U.S. electorate. The findings should be interpreted as exploratory and illustrative rather than a statistically robust reflection of national public opinion.

1. Economic and immigration issues dominated

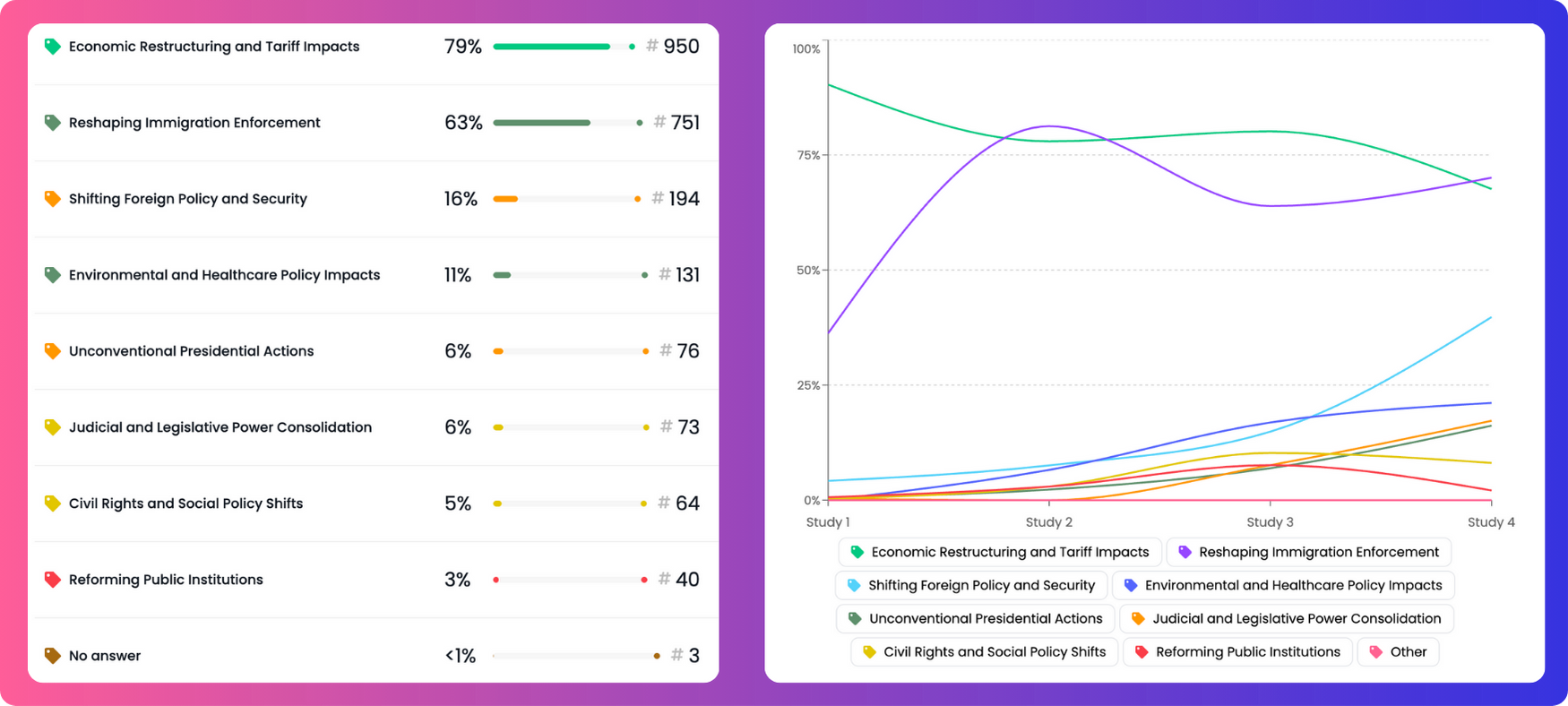

When asked about the biggest actions or policies of recent weeks, voters raised 8 themes organically, in their own words. Two stood out by far: economic issues and immigration.

Other themes, such as social unity, national security, and climate change, were present but less prominent. Because every theme emerged directly from voters' own words rather than from a pre-set list, this ranking reflects the priorities voters actually hold.

2. Narrative context adds meaning

Instead of a single “right/wrong direction” tally, Glaut’s AIMI approach pieced together sentiment from answers to several open questions. The resulting analysis revealed a predominance of negative feelings about America’s trajectory and provided richer narrative context than a simple yes/no measure.

According to a preliminary comparative study, Glaut’s platform not only matched the reach of traditional research but also significantly outperformed static surveys in several critical areas:

Glaut’s AI agents also mitigate fraud through voice interaction, interview-behavior consistency checks, and interpretive scoring, ensuring reliable, trustworthy data every time.

While our findings illuminate real voter narratives, this tracker was a prototype: its balanced (not representative) sample means results should be treated as exploratory. Still, the technology is now proven and ready to power more robust, fully representative studies in politics and beyond.

Glaut’s case is about moving political research toward methods that honor the complexity of public opinion. AIMIs enable organizations to:

Political research deserves tools that go deeper and work smarter. Glaut’s AI-moderated interviews set a new standard: scalable, human, insightful.

Want to see or hear real voter perspectives? Try the interview or read the full report on Glaut. Experience the difference that next-generation qualitative research can make.


